Batch models

Batch models and their associated anomaly rules operate on accumulated data stored in the UBA analytical store. Batch models analyze ingested data over a longer time window, such as the last 24 hours, and typically run overnight because they must process large amounts of data.

The following is a selection of the batch models available in Splunk UBA:

- Account Exfiltration Model

- Device Exfiltration Model

- Excessive File Size Change Model

- False Positive Suppression Model

- New Access Model for Box

- Rare File Access Model

- Rare Microsoft Windows Device Access Model Using Authentication Data

- Rare Microsoft Windows Device Access Model Using Login Data

- Rare VPN Login Location Model

- Unusual Volume of Box Downloads per User Model

- Unusual Volume of Box Login Failure Events per User Model

- Unusual Volume of VPN Login Events per User Model

- Unusual Volume of VPN Traffic per User Model

To learn about the batch models of Lateral Movement, VPN, and Time-series, see Lateral Movement model, VPN login related anomaly detection models, and Time-series models.

To learn more about data mapping, see Data mapping for model-based anomalies in Splunk UBA and Data mapping for rule-based anomalies in Splunk UBA in the Get Data into Splunk User Behavior Analytics manual.

Account Exfiltration Model

This model constructs user profiles to identify malicious data movement behaviors, considering the various types of data transfer performed by each account. The model analyzes HTTP and firewall data, processing inter-firewall-zone transfers, LAN transfers, and WAN transfers to countries, to detect transfers that deviate from normal behavior patterns.

The volume of outgoing traffic analyzed by the model is derived from multiple data sources, including firewall logs, web proxy logs, and email logs that contain information on "outgoing" bytes.

You can adjust the minimum mathematical anomaly score (threshold), the minimum suspiciousness threshold (anomalyScoreThreshold), and the maximum number of anomalies (anomalyCountThreshold) in the model's registration and configuration block in ModelRegistry.json.

The anomalyCountThreshold parameter was introduced to prevent large numbers of anomalies, caused by invalid data onboarding, from flooding the logs. The model also applies Spark optimization techniques, such as Spark aggregation and pivot transformations, to reduce execution time, maximum shuffle reads and writes, and disk and memory spills.
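The three limits described above can be pictured as successive filters. The following is a minimal sketch, not the Splunk UBA implementation: the `Candidate` record, the function name, and the assumption that the cap keeps the most suspicious anomalies are all hypothetical; only the parameter roles (threshold, anomalyScoreThreshold, anomalyCountThreshold) come from this page.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical anomaly candidate; field names are illustrative only."""
    account: str
    raw_score: float       # compared against threshold
    suspiciousness: float  # compared against anomalyScoreThreshold


def filter_anomalies(candidates, threshold, anomaly_score_threshold, anomaly_count_threshold):
    """Keep candidates that pass both minimum scores, then cap the output."""
    passing = [
        c for c in candidates
        if c.raw_score >= threshold and c.suspiciousness >= anomaly_score_threshold
    ]
    # Assumption: keep the most suspicious candidates first, so a burst caused
    # by invalid data onboarding is truncated instead of flooding the output.
    passing.sort(key=lambda c: c.suspiciousness, reverse=True)
    return passing[:anomaly_count_threshold]


candidates = [
    Candidate("alice", 0.90, 7.5),
    Candidate("bob", 0.40, 9.0),    # fails the raw score minimum
    Candidate("carol", 0.95, 8.2),
    Candidate("dave", 0.80, 3.0),   # fails the suspiciousness minimum
]
result = filter_anomalies(candidates, threshold=0.5,
                          anomaly_score_threshold=5.0, anomaly_count_threshold=1)
print([c.account for c in result])  # ['carol']
```

With a cap of 1, both alice and carol pass the score filters, but only the more suspicious one is emitted.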

Device Exfiltration Model

This model concentrates on monitoring device activities, specifically the volume of traffic leaving the network, to detect data transfers per device. The model analyzes HTTP and firewall data, processing inter-firewall-zone transfers, LAN transfers, and WAN transfers to countries, to detect transfers that deviate from normal behavior patterns.

The volume of outgoing traffic analyzed by the model is derived from multiple data sources, including firewall logs, web proxy logs, and email logs that contain information on "outgoing" bytes.
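The per-device view of outgoing bytes amounts to an aggregation followed by a pivot on transfer type. The following sketch assumes events have already been normalized from firewall, proxy, and email logs into hypothetical `(device, transfer_type, outgoing_bytes)` tuples; it illustrates the shape of the data, not the model's actual pipeline.

```python
from collections import defaultdict

# Hypothetical normalized events: (device, transfer_type, outgoing_bytes).
events = [
    ("host-1", "wan", 1200), ("host-1", "lan", 300), ("host-2", "wan", 50),
    ("host-1", "wan", 800), ("host-2", "zone", 400),
]


def pivot_outgoing_bytes(events, transfer_types=("zone", "lan", "wan")):
    """Sum outgoing bytes per device, with one column per transfer type."""
    # Each new device starts with a fresh zero-filled row.
    table = defaultdict(lambda: dict.fromkeys(transfer_types, 0))
    for device, transfer_type, nbytes in events:
        table[device][transfer_type] += nbytes
    return dict(table)


pivot = pivot_outgoing_bytes(events)
print(pivot["host-1"])  # {'zone': 0, 'lan': 300, 'wan': 2000}
```

In PySpark, the same shape comes from `df.groupBy("device").pivot("transfer_type").sum("outgoing_bytes")`, which matches the kind of aggregation and pivot transformation this page mentions.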

You can adjust the minimum mathematical anomaly score (threshold), the minimum suspiciousness threshold (anomalyScoreThreshold), and the maximum number of anomalies (anomalyCountThreshold) in the model's registration and configuration block in ModelRegistry.json.

The anomalyCountThreshold parameter was introduced to prevent large numbers of anomalies, caused by invalid data onboarding, from flooding the logs. The model also applies Spark optimization techniques, such as Spark aggregation and pivot transformations, to reduce execution time, maximum shuffle reads and writes, and disk and memory spills.

Excessive File Size Change Model

This model discovers data collection practices in cloud file stores that precede data exfiltration. Specifically, the model identifies files that grow unexpectedly as a byproduct of data collection. Examples of this include significant copy-pasting of data to an exfiltration file, or collecting files for exfiltration to an archive or zip file.

The model tracks and qualifies jumps in file sizes over time. It qualifies size jumps separately for three classes of files: small, regular, and large. Each class has very different size-increase properties.
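Qualifying jumps per size class can be sketched as follows. The class boundaries and minimum growth ratios below are invented for illustration; the page does not state the values the model uses. The point is that the same absolute growth means different things for small and large files.

```python
# Hypothetical class boundaries (bytes) and minimum growth ratios per class.
SIZE_CLASSES = [
    ("small", 0, 100_000, 10.0),
    ("regular", 100_000, 50_000_000, 3.0),
    ("large", 50_000_000, float("inf"), 1.5),
]


def classify(size):
    """Return (class_name, min_ratio) for a file size in bytes."""
    for name, low, high, min_ratio in SIZE_CLASSES:
        if low <= size < high:
            return name, min_ratio
    raise ValueError(f"invalid size: {size}")


def qualify_jump(old_size, new_size):
    """Classify the file by its size before the change and test whether the
    growth ratio reaches that class's minimum."""
    name, min_ratio = classify(old_size)
    ratio = new_size / max(old_size, 1)  # avoid dividing by zero for empty files
    return name, ratio, ratio >= min_ratio


print(qualify_jump(20_000, 150_000))           # ('small', 7.5, False)
print(qualify_jump(2_000_000, 8_000_000))      # ('regular', 4.0, True)
print(qualify_jump(100_000_000, 120_000_000))  # ('large', 1.2, False)
```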

The model establishes a number of additional features related to individual instances of file size jumps, as well as averages and extremes of these jumps. A number of model features are developed based on folders in which a file can be found, and the users and sharing practices related to all copies of the observed file and its folders. This large assembly of features helps the model distinguish between benign and suspicious file size jumps.

The model is configurable and can be set to analyze data from all file-related data sources together, to focus on each individual data source, or to exclude some data sources. All pre-trained features can be specifically adapted to each use case.

False Positive Suppression Model

The False Positive Suppression Model uses the false positive tags that users apply to detected anomalies to suppress false alerts automatically. Using self-supervised deep learning algorithms, the model generates a vectorized feature representation of each anomaly. The model then uses a ranking mechanism based on user feedback to prioritize newly detected anomalies. Anomalies that exceed a predetermined similarity threshold are automatically identified as false positives, providing user-friendly suppression of false alerts.

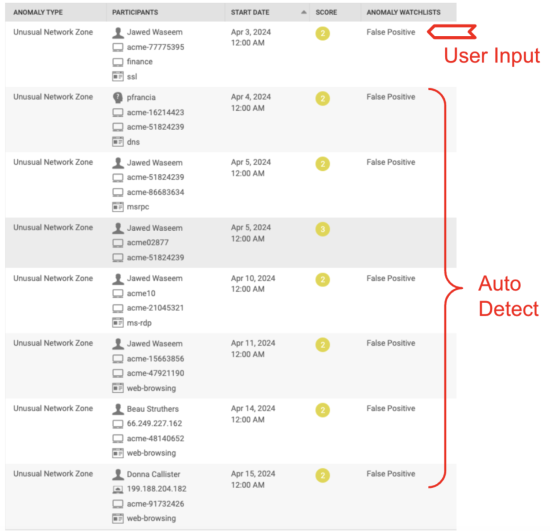

As illustrated in the following image, a user has identified a false positive in Unusual Network Zone anomalies. The model then automatically detected similar false positives in the following days. The model intentionally does not hide the detected false positive anomalies. Instead, it adds a tag to them so that you can still see them and avoid missing true positives.

The False Positive Suppression Model runs every day at 3:30 AM, after the cron jobs for all other offline models have run. This schedule ensures that the model collects and processes all the anomalies that these offline models generate each day. The model also processes, daily, the anomalies produced by streaming models and rule-based detections up to that point.

You can adjust the sensitivity of false positive suppression with the thresholdSimilarity parameter, which is defined in the model registry. The default value of thresholdSimilarity is 0.99. Increase this value for stricter detection of false positives, or decrease it for less strict suppression.
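The effect of thresholdSimilarity can be sketched as a nearest-match test against the anomalies users have tagged. This sketch assumes the anomaly vectors already exist (the page says a self-supervised deep learning model produces them) and uses cosine similarity as the comparison. The similarity measure and function names are assumptions, not the documented method.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def tag_false_positives(new_anomalies, tagged_fp_vectors, threshold_similarity=0.99):
    """Mark each new anomaly whose best similarity to any user-tagged false
    positive reaches threshold_similarity. Anomalies are tagged, not removed."""
    tags = {}
    for anomaly_id, vec in new_anomalies.items():
        best = max((cosine(vec, fp) for fp in tagged_fp_vectors), default=0.0)
        tags[anomaly_id] = best >= threshold_similarity
    return tags


tagged = [[1.0, 0.0, 0.2]]
new = {"a1": [2.0, 0.0, 0.41], "a2": [0.0, 1.0, 0.0]}
result = tag_false_positives(new, tagged)
print(result)  # {'a1': True, 'a2': False}
```

Raising `threshold_similarity` toward 1.0 tags only near-identical anomalies, which is the "stricter" direction described above.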

The False Positive Suppression Model learns only from false positive tagging operations. It cannot learn from untagging operations.

Consequently, if you untag a false positive, the false positives that this model tagged automatically based on that tag are not untagged. You must manually untag these automatically tagged false positives when necessary.

In Splunk UBA version 5.4.1 and higher, a Large Language Model (LLM) connector is available as an enhancement for the False Positive Suppression Model. The LLM connector leverages capabilities from GenAI models. When you use the LLM connector, the model adheres to the thresholdRanking parameter, which defines the maximum number of false alerts the model will classify.

Turn on the LLM connector by setting the advancedModelOption parameter to AZ. You must also provide the LLM endpoint URL and access token, specified by the apiURL and apiKey parameters, respectively.

Using the LLM endpoint APIs involves sending data to external servers, and API calls can incur additional costs. Carefully consider data privacy, legal compliance, and budget constraints when deciding to use the LLM connector in UBA.

New Access Model for Box

This model identifies suspicious first-time accesses to files in cloud file stores. An access includes any user activity or event type on a file, such as previewing, moving, syncing, and deleting. The model qualifies whether an access is suspicious based on the user's history of file accesses, the file accesses of other similarly behaving users, and the properties of the files themselves.

The model acts as a recommender system that can also accept partial external guidance from human expert rules. Components of the recommender system include:

- Constructing an access graph that associates users with files.

- Enriching the graph's edges with features that describe access properties.

- Adding features that represent human expert rules.

- Running matrix factorization on the graph.

- Letting the resulting recommender system identify which of the first-time accesses that actually occurred align with the accesses it predicts.
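The matrix factorization step can be illustrated with a small, dense sketch. It assumes a plain user-by-file access matrix (1 for accessed, 0 for not) and stochastic gradient descent, and it omits the edge features and expert-rule features listed above; it is a generic factorization, not the model's actual algorithm. A first-time access with a high predicted score aligns with what the recommender expects, while a low score marks it as a candidate for scrutiny.

```python
import random


def factorize(matrix, rank=2, lr=0.05, reg=0.01, epochs=2000, seed=7):
    """SGD matrix factorization of a user x file access matrix.
    Returns user factors U and file factors V."""
    rng = random.Random(seed)
    n_users, n_files = len(matrix), len(matrix[0])
    U = [[rng.uniform(0, 0.1) for _ in range(rank)] for _ in range(n_users)]
    V = [[rng.uniform(0, 0.1) for _ in range(rank)] for _ in range(n_files)]
    for _ in range(epochs):
        for u in range(n_users):
            for f in range(n_files):
                err = matrix[u][f] - sum(U[u][k] * V[f][k] for k in range(rank))
                for k in range(rank):
                    uk, fk = U[u][k], V[f][k]
                    # Gradient step on squared error with L2 regularization.
                    U[u][k] += lr * (err * fk - reg * uk)
                    V[f][k] += lr * (err * uk - reg * fk)
    return U, V


def score(U, V, user, file):
    """Predicted affinity of a user for a file."""
    return sum(a * b for a, b in zip(U[user], V[file]))


# Users 0-1 work on files 0-1; users 2-3 work on files 2-3.
access = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
U, V = factorize(access)
in_cluster = score(U, V, 0, 1)
cross_cluster = score(U, V, 0, 2)
print(round(in_cluster, 2), round(cross_cluster, 2))
```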

The model does not come pre-trained. Instead, it retrains the recommender system on live user data. Because this training can be computationally prohibitive at scale, some parts of the training run optionally or infrequently to reduce cost. By default, hyperparameter tuning of the underlying recommender system runs once a month, and k-fold validation of the training process is optional. The only component of the training that runs continually is training the recommender system on user data.

The model offers many parameters that you can configure in the Model Registry including which file extensions are considered risky, which user actions are considered risky, and whether the model includes information about file access activities of the user's peers.

Rare File Access Model

This model looks for rare accesses to files by individual users. Rarity of a file access is established by tracking a number of features related to users, types of access activities, services involved in the file accesses, and the potential involvement of other users in these accesses, such as through collusion or impersonation. The model establishes that a file access is rare and also scores the risk of the rare access. The more observed features exhibit rare behavior, the more certain the model is that an access is risky.

This model tracks several features, including the rarity of accesses to each file, the rarity of specific access actions such as reading, editing, or deleting, and the rarity of the applications that facilitate the access, such as Chrome, Word, Excel, or Acrobat Reader. The model also tracks many conditional probability features. For example: given an application, how rare is a specific access action; given an action, how rare is the access application; given a user, how rare is an access action or application for that user; and given the user who initiated the access, how rare is the user to whom the file was sent or with whom it was shared.
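Conditional probability features such as "given an application, how rare is a specific access action" can be estimated from historical counts. The following sketch uses simple relative frequencies over hypothetical event dictionaries; the model's actual estimators and any smoothing it applies are not described on this page.

```python
from collections import Counter


def conditional_prob(history, given_key, target_key):
    """Return p(target | given), estimated from historical event dicts."""
    pair_counts = Counter((e[given_key], e[target_key]) for e in history)
    given_counts = Counter(e[given_key] for e in history)

    def prob(given, target):
        if given_counts[given] == 0:
            return 0.0  # never-seen condition: treat as maximally rare
        return pair_counts[(given, target)] / given_counts[given]

    return prob


history = (
    [{"app": "Excel", "action": "edit"}] * 8
    + [{"app": "Excel", "action": "read"}] * 2
    + [{"app": "Chrome", "action": "read"}] * 5
)
p_action_given_app = conditional_prob(history, "app", "action")
p_app_given_action = conditional_prob(history, "action", "app")
print(p_action_given_app("Excel", "read"))             # 0.2
print(p_action_given_app("Excel", "delete"))           # 0.0
print(round(p_app_given_action("read", "Excel"), 3))   # 0.286
```

Each low probability is one rare feature; the more such features an access triggers, the more confident the risk score.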

Other tracking features can be added in the Model Registry to further separate benign from suspicious instances of rare accesses to files. Model Registry also offers configurable parameters by which you can focus the model to look into specific kinds of raw events, specific devices, and users.

Rare Microsoft Windows Device Access Model Using Authentication Data

This model looks for user authentications to various devices using Active Directory or Windows events. The authentication can be an explicit user login or a resource-access authentication, such as access to web pages on a web server. Rarity of a user visiting a device is established by tracking a number of features related to users and devices individually, as well as to user-device associations. The more observed features exhibit rare behavior, the more certain the model is that the association is risky.

The model can optionally include the behaviors of a user peer group, a device peer group, or both. The model establishes that a user-device association is rare and scores the risk of the suspicious association. The model also performs alert suppression: if more than a pre-configured number of users or devices share the same rarity features, the alert is not raised.
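Alert suppression by shared rarity features can be sketched as a group-by on a feature signature. The `signature` field, the `max_shared` argument, and the `entity_key` switch between users and devices are hypothetical names. The idea is that a "rare" pattern many entities share at once is more likely an environmental change than an attack.

```python
from collections import defaultdict


def suppress_shared_alerts(alerts, max_shared, entity_key="user"):
    """Drop alerts whose rarity-feature signature is shared by more than
    max_shared distinct entities (users or devices)."""
    entities_by_signature = defaultdict(set)
    for alert in alerts:
        entities_by_signature[alert["signature"]].add(alert[entity_key])
    return [a for a in alerts
            if len(entities_by_signature[a["signature"]]) <= max_shared]


shared = ("rare_user_for_device", "rare_logon_type")
alerts = [
    {"user": "u1", "device": "d9", "signature": shared},
    {"user": "u2", "device": "d9", "signature": shared},
    {"user": "u3", "device": "d9", "signature": shared},
    {"user": "u4", "device": "d2", "signature": ("rare_domain",)},
]
kept_by_user = suppress_shared_alerts(alerts, max_shared=2)
kept_by_device = suppress_shared_alerts(alerts, max_shared=2, entity_key="device")
print([a["user"] for a in kept_by_user])  # ['u4']
print(len(kept_by_device))                # 4
```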

The model is configurable such that other tracking features can be added in the Model Registry. Model Registry also offers configurable parameters by which you can focus the model to look into specific kinds of raw events, specific devices, and users.

Rare Microsoft Windows Device Access Model Using Login Data

This model looks for explicit user logins to devices that happen on a Windows login screen. Rarity of a user login to a device is established by tracking a number of features related to users and devices individually, as well as to their associations. The model optionally includes user peer group behaviors, device peer group behaviors, or both. The more observed features exhibit rare behavior, the more certain the model is that the association is risky.

The model establishes that a user-device association is rare and also scores the risk of the rare association. The model also performs alert suppression: if more than a pre-configured number of users or devices share the same rarity features, the alert is not raised.

This model tracks individual features, such as the total number of logins for a given user. The model also tracks many conditional probability features. For example: given a device, how rare is the presence of a given user out of all users; given a user, how rare is their presence on a given device out of all devices on which the user appears; given a device, how rare is the login type the user used; given a user, how rare is the employed login type in general; given a login process, how rare is the code that invoked it; and given a user, how rare is the domain of the device to which the user is logging in.
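The first two conditional features above look at the same user-device pair from both sides. This sketch estimates them as relative frequencies from hypothetical `(user, device)` login pairs; the function and feature names are illustrative, not taken from the model registry.

```python
from collections import Counter


def association_features(logins):
    """From (user, device) login pairs, build a function returning
    p(user | device) and p(device | user) for any pair."""
    pair = Counter(logins)
    per_device = Counter(d for _, d in logins)
    per_user = Counter(u for u, _ in logins)

    def features(user, device):
        n = pair[(user, device)]
        return {
            "p_user_given_device": n / per_device[device] if per_device[device] else 0.0,
            "p_device_given_user": n / per_user[user] if per_user[user] else 0.0,
        }

    return features


logins = [("alice", "ws-1")] * 9 + [("alice", "srv-db")] + [("dba", "srv-db")] * 19
features = association_features(logins)
print(features("alice", "srv-db"))  # {'p_user_given_device': 0.05, 'p_device_given_user': 0.1}
print(features("dba", "srv-db"))    # {'p_user_given_device': 0.95, 'p_device_given_user': 1.0}
```

Here alice's single login to the database server is rare from both the device's and the user's point of view, so two features signal rarity at once.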

The model is configurable, such that other tracking features can be added in the Model Registry. Model Registry also contains configurable parameters by which you can focus the model to look into specific kinds of raw events, specific devices, and users.

Rare VPN Login Location Model

This model looks for users who access the company network from rare VPN locations. Locations are reported as countries. Suspicious rarity is determined by tracking a number of features related to users, devices, and the source countries from which users initiate VPN connections. The model establishes that an access comes from a rare country and scores the related risk. The more observed features exhibit rare behavior, the more certain the model is that the access is risky.

This model tracks the rarity of a source country in general, as well as several conditional features. Conditional features include rare source countries for a given user, rare source countries for a given device, and rare source countries for a given department or peer group in the company. Other features of interest for rarity and noise suppression can be added in the Model Registry. Model Registry also contains configurable parameters by which you can focus the model to look into specific kinds of raw events, specific devices, and users.
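Combining the global and conditional country features can be sketched as counting how many of them fall below a rarity cutoff. The history records, the 0.05 cutoff, and the counting rule are assumptions for illustration. In the example, Brazil is common company-wide but has never been seen for this user, device, or peer group.

```python
def country_features(history, user, device, peer_group, country):
    """Global and conditional frequencies of a VPN source country, from
    historical (user, device, peer_group, country) records."""
    def freq(rows):
        rows = list(rows)
        if not rows:
            return 0.0
        return sum(1 for r in rows if r[3] == country) / len(rows)

    return {
        "p_country": freq(history),
        "p_country_given_user": freq(r for r in history if r[0] == user),
        "p_country_given_device": freq(r for r in history if r[1] == device),
        "p_country_given_peer_group": freq(r for r in history if r[2] == peer_group),
    }


def rare_feature_count(features, rare_below=0.05):
    """More rare features means more confidence that the login is risky."""
    return sum(1 for p in features.values() if p < rare_below)


history = (
    [("alice", "lt-1", "sales", "US")] * 40
    + [("bob", "lt-2", "sales", "US")] * 38
    + [("bob", "lt-2", "sales", "FR")] * 2
    + [("carol", "lt-3", "eng", "BR")] * 20
)
feats = country_features(history, "alice", "lt-1", "sales", "BR")
print(feats)
print(rare_feature_count(feats))  # 3
```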

Rare Events Model Scaling

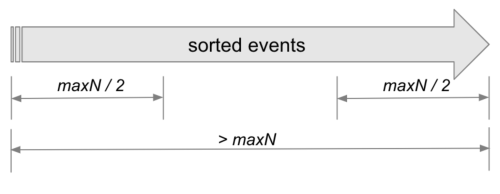

Use Rare Events Model Scaling to address memory usage issues that might occur during Rare Event Model execution. The cardinalitySizeLimit parameter sets a maximum limit on the number of entities (maxN) the model will process.

As shown in the following image, if the number of entities exceeds the specified cardinalitySizeLimit value (maxN), the model focuses solely on modeling both ends of the sorted events, maxN/2 at each end. This approach ensures that the model doesn't exhaust resources during execution.

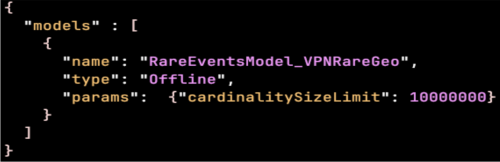
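The truncation described above can be sketched as sorting entities by frequency and keeping only the two ends. This is an illustration under assumptions: entities are sorted by event count with ties broken by name, and when maxN is odd the extra slot goes to the high end. Neither detail is documented.

```python
def limit_cardinality(counts, cardinality_size_limit):
    """If there are more entities than cardinality_size_limit (maxN), keep
    about maxN/2 from each end of the count-sorted list: the rarest and the
    most common entities."""
    if cardinality_size_limit <= 0:
        raise ValueError("cardinalitySizeLimit must be an integer greater than zero")
    items = sorted(counts.items(), key=lambda kv: (kv[1], kv[0]))
    if len(items) <= cardinality_size_limit:
        return dict(items)
    low = cardinality_size_limit // 2        # rarest entities kept
    high = cardinality_size_limit - low      # most common entities kept
    return dict(items[:low] + items[len(items) - high:])


counts = {"a": 1, "b": 2, "c": 3, "d": 4, "e": 5, "f": 6}
print(list(limit_cardinality(counts, 4)))   # ['a', 'b', 'e', 'f']
print(len(limit_cardinality(counts, 10)))   # 6
```

Keeping both ends preserves the rare entities the model scores, together with the common entities that define normal behavior, while bounding memory.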

The default value of cardinalitySizeLimit is 10,000,000. You can set this parameter to any integer greater than zero in the local model registry file at the following path:

/etc/caspida/local/conf/modelregistry/offlineworkflow/ModelRegistry.json

The following image shows an example of a local model registry file:

Each Rare Event Model observes its own value of cardinalitySizeLimit, as specified in its individual block in the local model registry file. The cardinalitySizeLimit parameter applies to the following Rare Event Models:

- RareEventsModel_HTTPUserAgentString

- RareEventsModel_FWPortApplication

- RareEventsModel_WindowsLogs

- RareEventsModel_WindowsLogins

- RareEventsModel_WindowsAuthentications

- RareEventsModel_NetworkCommunicationRareGeo

- RareEventsModel_VPNRareGeo

- RareEventsModel_ExternalAlarm

- RareEventsModel_FileAccess

- RareEventsModel_FWScopePortApp

- RareEventsModel_UnusualProcessAccess

- RareEventsModel_RareEmailDomain

- RareEventsModel_UnusualDBActivity

Unusual Volume of Box Downloads per User Model

This model tracks various outliers in time series data. For example, the model can identify anomalies in the volume of downloaded data, or the sum of downloaded file sizes, which can indicate data exfiltration.

Outliers are notable offsets from baseline estimates, such as moving averages of estimated distributions, percentiles of estimated distributions, or hard thresholds established by specific domain expertise. In this instance, the baseline is a Gaussian moving average with extreme values removed.
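A Gaussian moving average with extreme values removed can be sketched as follows. The kernel width, the 10% trim at each end, and the Gaussian weighting by the age of each day are assumptions chosen for illustration. The page does not specify how the model sets them.

```python
import math


def robust_gaussian_baseline(history, sigma=7.0, trim=0.1):
    """Baseline (mean, std) from past daily values, oldest first.

    Drops the most extreme `trim` fraction at each end, then weights the
    remaining days with a Gaussian kernel on their age, favoring recent days.
    """
    n = len(history)
    if n == 0:
        raise ValueError("history must not be empty")
    k = int(n * trim)
    order = sorted(range(n), key=lambda i: history[i])
    kept = order[k:n - k]
    weights = {i: math.exp(-((n - 1 - i) ** 2) / (2 * sigma ** 2)) for i in kept}
    total = sum(weights.values())
    mean = sum(w * history[i] for i, w in weights.items()) / total
    var = sum(w * (history[i] - mean) ** 2 for i, w in weights.items()) / total
    return mean, math.sqrt(var)


def offset_z(value, mean, std, floor=1e-6):
    """Offset of a new value from the baseline in (floored) standard deviations."""
    return (value - mean) / max(std, floor)


downloads_mb = [100, 102, 98, 101, 900, 99, 103, 97, 100, 101]  # one past spike
mean, std = robust_gaussian_baseline(downloads_mb)
z = offset_z(1000, mean, std)
print(round(mean, 1), round(std, 2), round(z, 1))
```

Trimming keeps the single 900 MB day from inflating the baseline, so a new 1000 MB day still stands out clearly.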

The model derives conclusions from several sources of reference: the history of the observed entity (user, device, and so on), the history of the observed entity's peer groups, and the global behavior of all entities in the company. In terms of time, the model looks at daily behaviors, such as changes in daily statistics, and weekly behaviors, such as offsets of daily statistics from weekly baselines.

You can use the model to spot outliers across many dimensions, including instantaneous values, distributions, different time scales, personal behaviors, peer group behaviors, and global company behaviors. Each offset is scored. The higher the accumulated score, the more suspicious the outlier.
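Accumulating scores across reference baselines can be sketched as summing capped, upward-only offsets. Scoring only increases, capping each offset, and the baseline values are all assumptions for illustration. For volume-based exfiltration signals, drops are usually less interesting than spikes.

```python
def offset_score(value, mean, std, floor=1e-9):
    """Upward offset in standard deviations; decreases score zero."""
    return max(0.0, (value - mean) / max(std, floor))


def accumulated_score(value, baselines, cap=10.0):
    """Score a value against several (mean, std) baselines, such as personal
    history, peer group, and whole company, and add the capped scores."""
    return sum(min(cap, offset_score(value, m, s)) for m, s in baselines.values())


baselines = {
    "personal": (100.0, 50.0),
    "peer_group": (300.0, 100.0),
    "company": (400.0, 200.0),
}
total = accumulated_score(500.0, baselines)
print(total)  # 10.5
```

A value that is unusual for the user, the peer group, and the company collects score from all three references, which is what makes it more suspicious than a value that is unusual against only one.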

Unusual Volume of Box Login Failure Events per User Model

This model tracks various outliers in time series data. The model identifies outliers in counts of triggered events. These anomalies indicate a fundamental change in user or device activity, which is often security related. Outliers in this model are notable offsets from baseline estimates, such as moving averages, percentiles of the estimated distributions, or hard thresholds established by specific domain expertise.
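Two of the baseline types named above, a percentile of the entity's own history and a fixed expert threshold, can be checked side by side. The 95th percentile, the threshold of 50, and linear interpolation between ranks are assumptions for illustration.

```python
def percentile(values, q):
    """Linearly interpolated percentile (q in [0, 100]) of a non-empty list."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def count_flags(today, history, q=95, hard_threshold=50):
    """Flag a daily event count that exceeds a high percentile of its own
    history, or a fixed expert-chosen ceiling."""
    p = percentile(history, q)
    return {
        "percentile_value": p,
        "above_percentile": today > p,
        "above_hard_threshold": today >= hard_threshold,
    }


login_failures = [0, 1, 0, 2, 1, 0, 3, 1, 0, 2]  # daily Box login failures
flags = count_flags(12, login_failures)
print(flags)
```

Here 12 failures is far above this user's 95th percentile (about 2.55) but below the hard threshold, so only the percentile offset contributes.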

The model derives conclusions from several sources of reference: the history of the observed entity (user, device), the history of the observed entity's peer groups, and the global behavior of all entities in the company. In terms of time, the model looks at daily behaviors, such as changes in daily statistics, and weekly behaviors, such as offsets of daily statistics from weekly baselines.

You can use this model to spot outliers across many dimensions, including instantaneous values, distributions, different time scales, personal behaviors, peer group behaviors, and global company behaviors. Each offset is scored. The higher the accumulated score, the more suspicious the outlier.

Unusual Volume of VPN Login Events per User Model

This model tracks various outliers in time series data that count the VPN login events of individual users. Outliers in this model are notable offsets from baseline estimates, such as moving averages of estimated distributions, percentiles of estimated distributions, or hard thresholds established by specific domain expertise.

The model derives conclusions from several sources of reference: the history of the observed entity (user, device), the history of the observed entity's peer groups, and the global behavior of all entities in the company. In terms of time, the model looks at daily behaviors, such as changes in daily statistics, and weekly behaviors, such as offsets of daily statistics from weekly baselines.
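An offset of a daily statistic from a weekly baseline can be sketched by comparing today with the same weekday in earlier weeks. Treating "weekly baseline" as the same-weekday history, and flooring the spread at 1.0 so near-constant counts do not produce huge scores, are assumptions for illustration.

```python
from statistics import mean, pstdev


def weekly_offset(daily_counts, floor=1.0):
    """Compare the latest day with the same weekday in earlier weeks.
    daily_counts is oldest-first with one entry per day; the last is today."""
    today = daily_counts[-1]
    same_weekday = daily_counts[-8::-7]  # 7, 14, 21, ... days before today
    if not same_weekday:
        raise ValueError("need at least 8 days of history")
    base = mean(same_weekday)
    spread = max(pstdev(same_weekday), floor)
    return (today - base) / spread


# Three weeks of VPN logins where Mondays see about 5 logins, then a Monday with 30.
daily = [5, 2, 2, 2, 2, 2, 2,
         6, 2, 2, 2, 2, 2, 2,
         4, 2, 2, 2, 2, 2, 2,
         30]
print(weekly_offset(daily))  # 25.0
```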

You can use this model to spot outliers across many dimensions, including instantaneous values, distributions, different time scales, personal behaviors, peer group behaviors, and global company behaviors. Each offset is scored. The higher the accumulated score, the more suspicious the outlier.

Unusual Volume of VPN Traffic per User Model

This model tracks outliers in various kinds of time series data describing the volume of data that individual users transmit through VPN sessions. Outliers are notable offsets from baseline estimates, such as moving averages of estimated distributions, percentiles of estimated distributions, or hard thresholds established by specific domain expertise.

The model derives conclusions from several sources of reference: the history of the observed entity (user, device), the history of the observed entity's peer groups, and the global behavior of all entities in the company. In terms of time, the model looks at daily behaviors, such as changes in daily statistics, and weekly behaviors, such as offsets of daily statistics from weekly baselines.

You can use this model to spot outliers across many dimensions, including instantaneous values, distributions, different time scales, personal behaviors, peer group behaviors, and global company behaviors. Each offset is scored. The higher the accumulated score, the more suspicious the outlier.


This documentation applies to the following versions of Splunk® User Behavior Analytics: 5.4.1, 5.4.1.1, 5.4.2
